SoftBank developing AI agents to make each worker like a ‘thousand-armed deity’

SoftBank boss Son Masayoshi said on Wednesday that his team is working on a first-of-its-kind system in which AI agents will be able to self-replicate

“Hey ChatGPT, left-click on the enter password field in the pop-up window appearing in the lower left quadrant of the screen, fill in XUS&(#($J, and press Enter.”

Fun, eh? No, thanks. I’ll just move my cheap mouse and type those characters on my needlessly clicky keyboard instead of reading the password out loud in my co-working space.

It’s pretty cool to see ChatGPT understand your voice command, book cheap tickets for eight people to watch a Liverpool match at Anfield, and land you at the checkout screen. But will you trust it with your password? Or will you just type it on a physical keyboard?

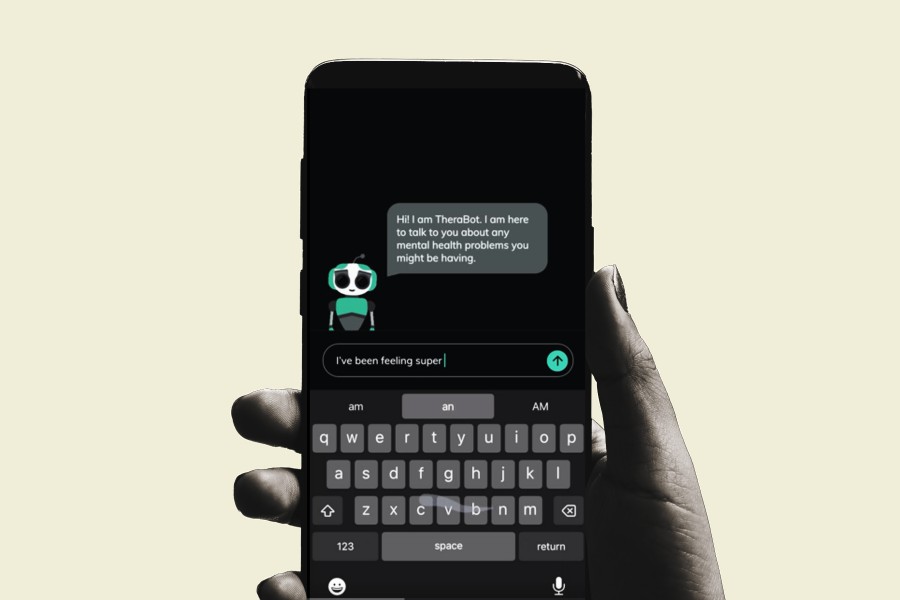

Imagine going all-in on AI, only to realize that the last-mile step, where you REALLY need a keyboard or mouse, can’t be done without one, and you’re now stuck. That’s exactly the scenario many have been wondering about after seeing flashy AI agent and automation demos from the likes of Google, OpenAI, and Anthropic.

AI was the overarching theme at Google’s I/O event earlier this year. By the end of the keynote, I was convinced that Android smartphones will never be the same again. By extension, neither will any platform where Gemini lands, from Workspace apps such as Gmail to Google Maps navigation in the car.

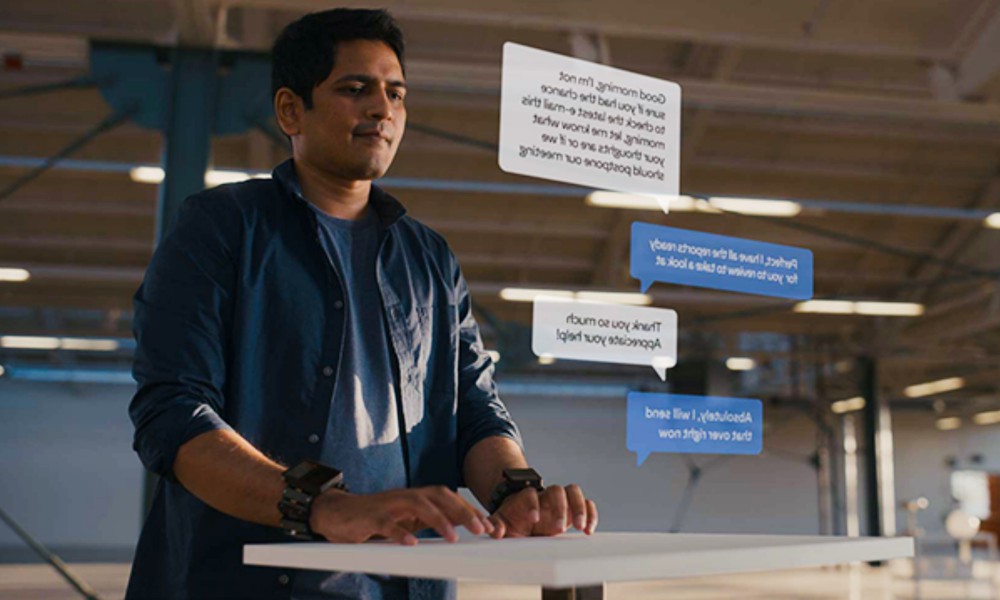

The most impressive demos were Project Mariner and the latest research prototype of Project Astra. Think of Astra as a next-gen conversational assistant that lets you talk and get real stuff done without ever tapping the screen or pulling up the keyboard. You can shift your queries from a user manual hosted on a brand’s website to instructional YouTube videos without ever repeating the context.

It’s almost as if the true concept of memory has arrived for AI. In a web browser, these agents can book tickets for you, landing you on the final page where you simply confirm that all the details are as requested and proceed with the payment. That leads one to wonder whether the keyboard and mouse are becoming dead concepts for digital input as voice interaction comes to the forefront of AI.

Now, as odd as that sounds, your computer already supports voice-based control for navigating the operating system. On Windows and macOS, voice control tools are part of the accessibility suite. A handful of shortcuts are available to speed up the process, and you can create your own, as well.

With the advent of next-gen AI models, we’re talking about ditching the keyboard and mouse for everyone, not just offering voice control as an assistive technology.

Imagine Claude Computer Use combined with the eye-tracked input of Apple’s Vision Pro headset. In case you’re unfamiliar, Anthropic’s Computer Use is a, well, computer use agent. Anthropic says it lets the AI “use computers the way people do—by looking at a screen, moving a cursor, clicking buttons, and typing text.”

Now, picture a scenario where you speak your intent, the headset’s onboard mics pick it up, and Claude executes the task. For whatever final step still requires you, gestures fill the gap. The Vision Pro has demonstrated that eye-tracked controls are possible and work with a high degree of accuracy.

Away from headsets, voice-controlled AI can still work on an average computer. Hume AI, in partnership with Anthropic, is building a system called Empathetic Voice Interface 2 (EVI 2) that turns voice commands into computer input. It’s almost like talking to Alexa, but instead of ordering broccoli, the AI assistant understands what we are saying and turns it into keyboard or mouse input.
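
The article doesn’t describe how EVI 2 performs this translation, so what follows is only a toy sketch of the general idea: once speech has been transcribed, each command has to become a concrete mouse or keyboard event. The command phrasings, the `Click`, `TypeText`, and `KeyPress` types, and the `parse_command` helper are all hypothetical, and a real assistant would lean on a language model rather than regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Click:
    button: str  # "left" or "right"
    target: str  # plain-language description of the on-screen element


@dataclass
class TypeText:
    text: str


@dataclass
class KeyPress:
    key: str


InputEvent = Union[Click, TypeText, KeyPress]

# Hypothetical command phrasings; each regex is paired with a builder for its event.
PATTERNS = [
    (re.compile(r"^(left|right)[- ]click on (?:the )?(.+)$", re.IGNORECASE),
     lambda m: Click(m.group(1).lower(), m.group(2))),
    (re.compile(r"^type (.+)$", re.IGNORECASE),
     lambda m: TypeText(m.group(1))),
    (re.compile(r"^press (\w+)$", re.IGNORECASE),
     lambda m: KeyPress(m.group(1).capitalize())),
]


def parse_command(utterance: str) -> Optional[InputEvent]:
    """Map one transcribed voice command to a mouse or keyboard event (None if unrecognized)."""
    text = utterance.strip().rstrip(".")
    for pattern, build in PATTERNS:
        match = pattern.match(text)
        if match:
            return build(match)
    return None


# Usage: note that the password still has to be spoken aloud to reach the TypeText event.
events = [parse_command(said) for said in (
    "Left-click on the password field",
    "Type XUS&(#($J",
    "Press enter",
)]
```

Even in this simplified form, the sensitive text has to travel through the spoken transcript, which is exactly the co-working-space problem from the opening.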

All that sounds terrific, but let’s think of a few realistic scenarios. You will need a keyboard for fine-grained media edits, minor changes in a coding canvas, or filling cells in a spreadsheet. Imagine saying, “Hey Gemini, put four thousand eight hundred and ninety-five dollars in cell D5 and label it as air travel expense.” Yeah, I know. I’d just type it, too.
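
Even that one request hides a normalization step: the assistant has to turn the spoken words back into the four digits you would have typed. A minimal sketch of that step for English number words, assuming the transcript has already been split into the number phrase, the cell reference, and the label; `words_to_number` and the dictionary-based `sheet` are hypothetical stand-ins, and the helper only understands the words it lists.

```python
SMALL = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
    "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18,
    "nineteen": 19, "twenty": 20, "thirty": 30, "forty": 40,
    "fifty": 50, "sixty": 60, "seventy": 70, "eighty": 80, "ninety": 90,
}
SCALES = {"thousand": 1_000, "million": 1_000_000, "billion": 1_000_000_000}


def words_to_number(phrase: str) -> int:
    """Convert a phrase like 'four thousand eight hundred and ninety-five' to an int."""
    total = 0    # groups already closed by a scale word (e.g. 4000)
    current = 0  # group still being built (e.g. 895)
    for word in phrase.lower().replace("-", " ").split():
        if word == "and":
            continue
        if word in SMALL:
            current += SMALL[word]
        elif word == "hundred":
            current *= 100
        elif word in SCALES:
            total += current * SCALES[word]
            current = 0
        else:
            raise ValueError(f"unrecognized number word: {word!r}")
    return total + current


# Usage: a spreadsheet modeled as a plain dict of cell -> (value, label).
sheet = {}
sheet["D5"] = (words_to_number("four thousand eight hundred and ninety-five"), "air travel expense")
```

That is a lot of machinery to replace typing “4895” into a cell.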

If you go through demos of AI Mode in Search, the Project Mariner agent, and Gemini Live, you will get a glimpse of voice computing. All these AI advancements sound stunningly convenient, until they’re not. For example, at what point does it get too irritating to say things like “Move to the dialog box in the top-left corner and left-click on the blue button that says Confirm”?

It’s too cumbersome, even if all the steps before it were performed autonomously by an AI.

And let’s not forget the elephant in the room. AI has a habit of going haywire. “At this stage, it is still experimental—at times cumbersome and error-prone,” warns Anthropic about Claude Computer Use. The situation isn’t much different with OpenAI’s Operator agent, or with a similarly named tool currently in development at Opera, the folks behind a pretty cool web browser.

Removing the keyboard and mouse from an AI-boosted computer is like driving a Tesla with Full Self-Driving (FSD) enabled, but with no steering wheel and only the brake and accelerator pedals left. The car is definitely going to take you somewhere, but you need to take over if something unexpected happens.

In the computing context, think of troubleshooting, where you MUST be in the driver’s seat. Now assume that an AI model, driven primarily by voice commands captured by your computer’s mic, lands you at the final step of a workflow, such as making a payment.

Even with Passkeys, you will at least need to confirm your identity by entering a password, opening an authenticator app, or touching a fingerprint sensor. No OS maker or app developer (especially those dealing with identity verification) would give an AI model free rein over this critical task.
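
The division of labor described here, where the agent handles the routine steps and the human completes the identity check, can be sketched as a simple loop. This is a hypothetical illustration, not how Claude Computer Use, Operator, or Project Mariner are actually built; the `Step` type, the `sensitive` flag, and the `perform` and `ask_human` callbacks are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    description: str
    sensitive: bool = False  # e.g. entering a password or confirming a payment


def run_workflow(steps: List[Step],
                 perform: Callable[[Step], None],
                 ask_human: Callable[[Step], bool]) -> List[str]:
    """Run routine steps autonomously, but hand sensitive ones back to the user.

    ask_human returns True if the user completed the step (typed the password,
    touched the sensor) and False if they declined, which stops the workflow.
    """
    log = []
    for step in steps:
        if step.sensitive:
            if not ask_human(step):
                log.append(f"stopped: user declined '{step.description}'")
                break
            log.append(f"user completed: {step.description}")
        else:
            perform(step)
            log.append(f"agent did: {step.description}")
    return log


plan = [
    Step("find eight cheap tickets for a Liverpool match at Anfield"),
    Step("fill in the checkout form"),
    Step("confirm payment with a password or fingerprint", sensitive=True),
]
# Stand-ins: the agent "performs" steps by doing nothing, and the user approves.
log = run_workflow(plan, perform=lambda step: None, ask_human=lambda step: True)
```

The design choice worth noticing is that the agent never receives the credential at all; it only learns whether the human finished the step.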

It’s just too risky to automate with an AI agent, even with conveniences like Passkeys coming into the picture. Google often says Gemini will learn from memory and your own interactions. But it all begins with letting it monitor your computer usage, which fundamentally relies on keyboard and mouse input. So yeah, we’re back to square one.

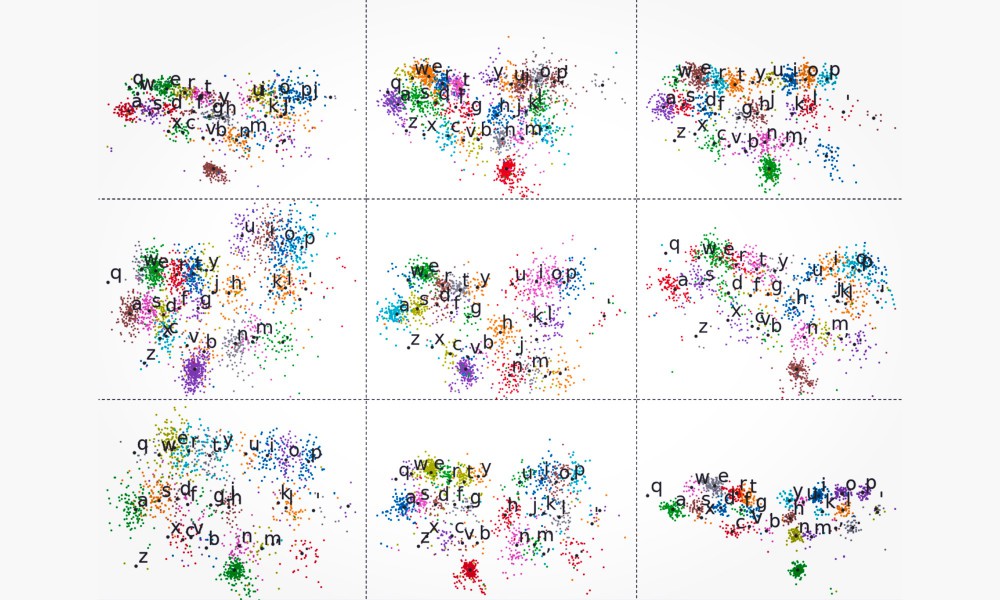

When we talk about replacing the computer mouse and keyboard with AI (or any other advancement), we are really talking about substituting them with a proxy, and usually landing at a familiar replacement. There is plenty of research out there on virtual mice and keyboards, dating back at least a decade, long before the seminal “transformers” paper was released and pushed the AI industry into the next gear.

In 2013, DexType released an app that tapped into the tiny Leap Motion hardware to enable virtual typing in the air. No touch screen required, nor any fancy laser projector like the one on the Humane AI Pin. Leap Motion died in 2019, but the idea didn’t. Meta is arguably the only company with a realistic software and hardware stack ready for an alternative form of human-computer interaction (HCI).

The company has been working on wrist-worn wearables that enable an entirely different form of gesture-based control. Instead of tracking the spatial movement of fingers and limbs, Meta is using a technique called electromyography (EMG). It turns the electrical motor nerve signals picked up at the wrist into digital input for controlling devices. And yes, cursor and keyboard input are very much part of the package.
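
Meta’s real decoder is far more sophisticated than anything shown here, and the article doesn’t describe it. Purely to illustrate how a muscle signal can become a discrete input event, here is a textbook-style sketch: rectify the raw EMG samples, smooth them into an envelope with a moving average, and register a “click” each time the envelope rises past a threshold. The `emg_envelope` and `detect_clicks` helpers, the window size, the threshold, and the synthetic signal are all illustrative assumptions.

```python
from typing import List


def emg_envelope(samples: List[float], window: int = 5) -> List[float]:
    """Full-wave rectify the raw signal, then smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


def detect_clicks(samples: List[float], threshold: float, window: int = 5) -> List[int]:
    """Return sample indices where the envelope first rises above the threshold.

    Each burst of muscle activity yields one click; the detector re-arms only
    after the envelope drops back below the threshold.
    """
    clicks = []
    active = False
    for i, level in enumerate(emg_envelope(samples, window)):
        if level >= threshold and not active:
            clicks.append(i)
            active = True
        elif level < threshold:
            active = False
    return clicks


# Synthetic signal: low-level noise, one burst of activity, then noise again.
quiet = [0.05, -0.04] * 10
burst = [0.9, -1.0] * 5
signal = quiet + burst + [0.03, -0.05] * 10
clicks = detect_clicks(signal, threshold=0.5, window=4)
```

The smoothing window trades latency for robustness: a longer window ignores more noise but registers the gesture later.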

Meta also claims that these gestures will be faster than a typical key press, because the input comes from electrical signals traveling from the wrist straight to a computer, rather than from finger movement. “It’s a much faster way to act on the instructions that you already send to your device when you tap to select a song on your phone, click a mouse or type on a keyboard today,” says Meta.

There are two problems with Meta’s approach, with or without AI coming into the picture. First, the concept of a cursor is still very much there, and so is the keyboard, albeit in digital form. We are just switching from physical to virtual, even if the replacement Meta is pushing sounds very futuristic, especially with its multimodal Llama AI models coming into the picture.

Then there’s the existential dilemma. These wearables are still very much in the realm of research labs. And when they come out, they won’t be cheap, at least for the first few years. Even barebones third-party apps like WowMouse are bound to subscriptions and held back by OS limitations.

I can’t imagine swapping my cheap $100 keyboard for an experimental voice- or gesture-based input device and having it replace full keyboard and mouse input in my daily workflow. Most importantly, it will take a while before developers build natural language-driven input into their apps. That’s going to be a long, drawn-out process.

What about alternatives? Well, we already have apps such as WowMouse, which turns your smartwatch into a gesture recognition hub for finger and palm movements. However, it only replaces cursor and tap gestures, not the full keyboard experience. And again, letting apps access your keyboard input is a risk that the OS overlords will push back on. Remember keyloggers?

At the end of the day, the conversational capabilities of AI models and their agentic chops are making a huge leap. But they still require you to cross the finish line with a mouse click or a few key presses, rather than fully replacing them. And voice is just too cumbersome when you could hit a keyboard shortcut or click the mouse instead of narrating a long chain of commands.

In a nutshell, AI will reduce our reliance on physical input, but won’t replace it. At least, not for the masses.
