
Google has announced a wide range of upgrades for its Gemini assistant today. To start, the new Gemini 2.0 Flash Thinking Experimental model now accepts file uploads as input and has also received a speed boost.

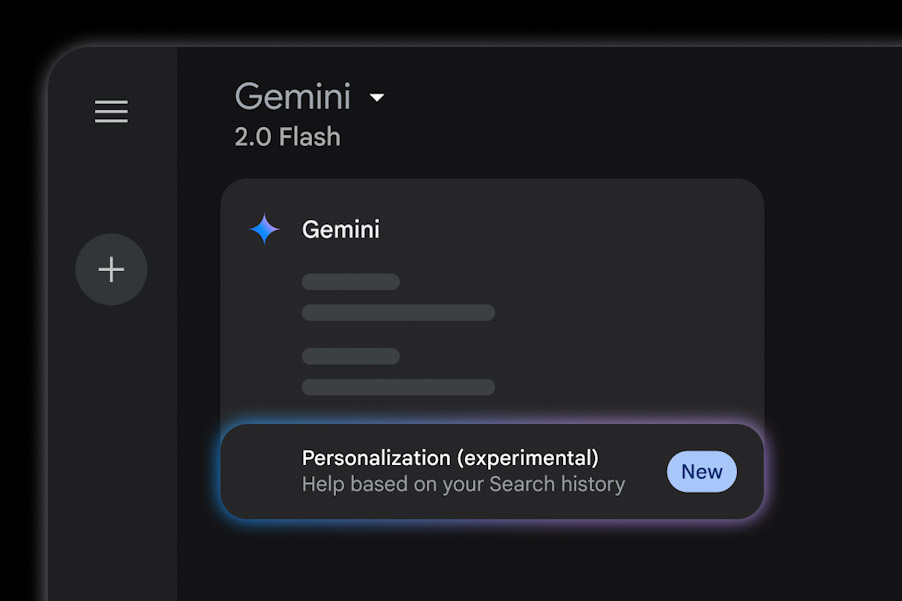

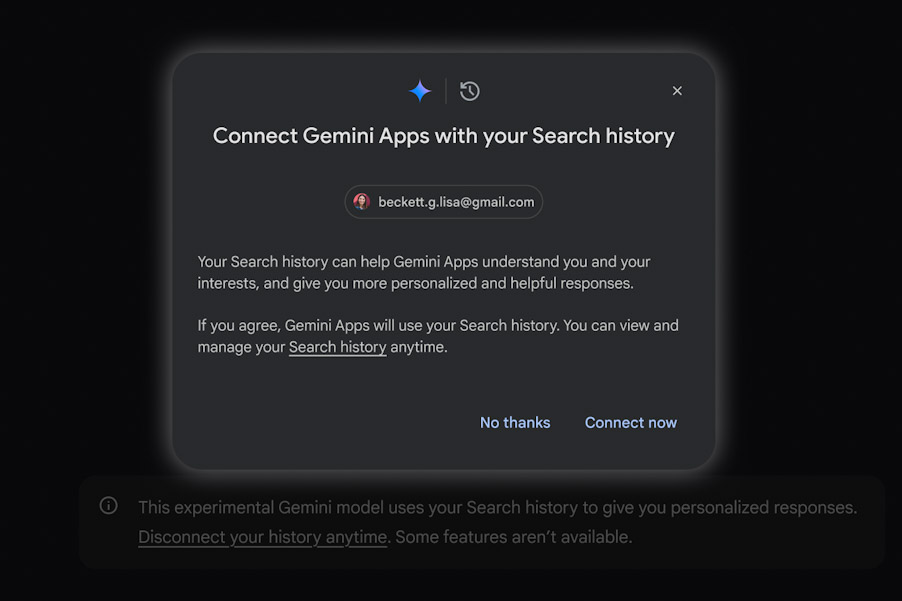

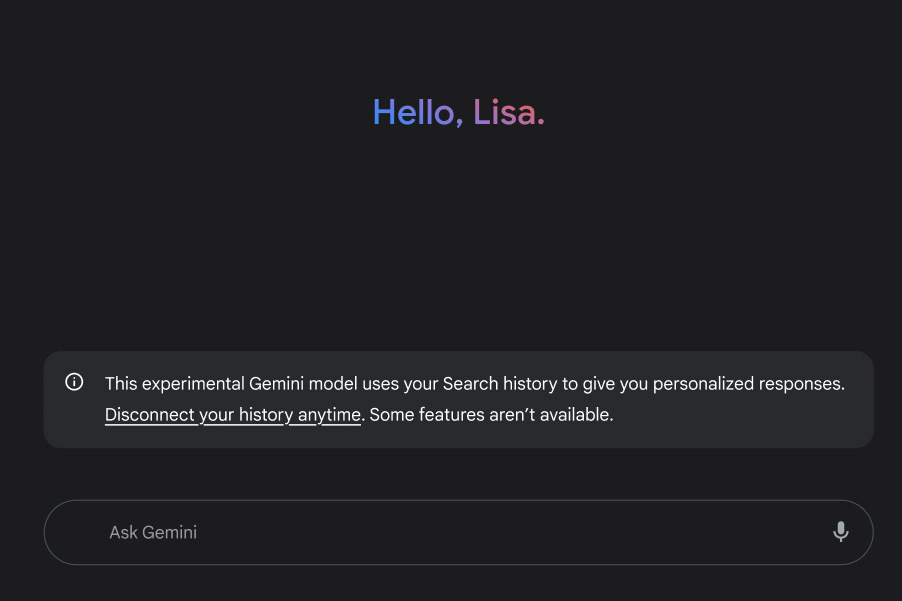

The more notable update, however, is a new opt-in feature called Personalization. In a nutshell, when you ask Gemini a question, it checks your Google Search history and offers a tailored response.

Down the road, Personalization will expand beyond Search. Google says Gemini will also tap into other apps in its ecosystem, such as Photos and YouTube, to offer more personalized responses. It’s somewhat similar to Apple’s delayed AI features for Siri, a delay that even prompted Apple to pull its ads.

Starting with the Google Search integration, if you ask the AI assistant for nearby cafe recommendations, it will check whether you have searched for that information before. If so, Gemini will try to include those results (and the names you came across) in its response.

“This will enable Gemini to provide more personalized insights, drawing from a broader understanding of your activities and preferences to deliver responses that truly resonate with you,” says Google in a blog post.

The new Personalization feature is tied to the Gemini 2.0 Flash Thinking Experimental model and will be available to free users as well as paid Gemini Advanced subscribers. Rollout begins today, starting with the web version, and will soon reach the mobile client, too.

Google says Personalization currently supports more than 40 languages and will expand to users around the globe. The feature certainly sounds like a privacy scare, but it is opt-in and comes with guardrails.

To make the responses even more relevant, users can tell Gemini to reference their past chats, as well. This feature has been exclusive to Advanced subscribers so far, but it will be extended to free users worldwide in the coming weeks.

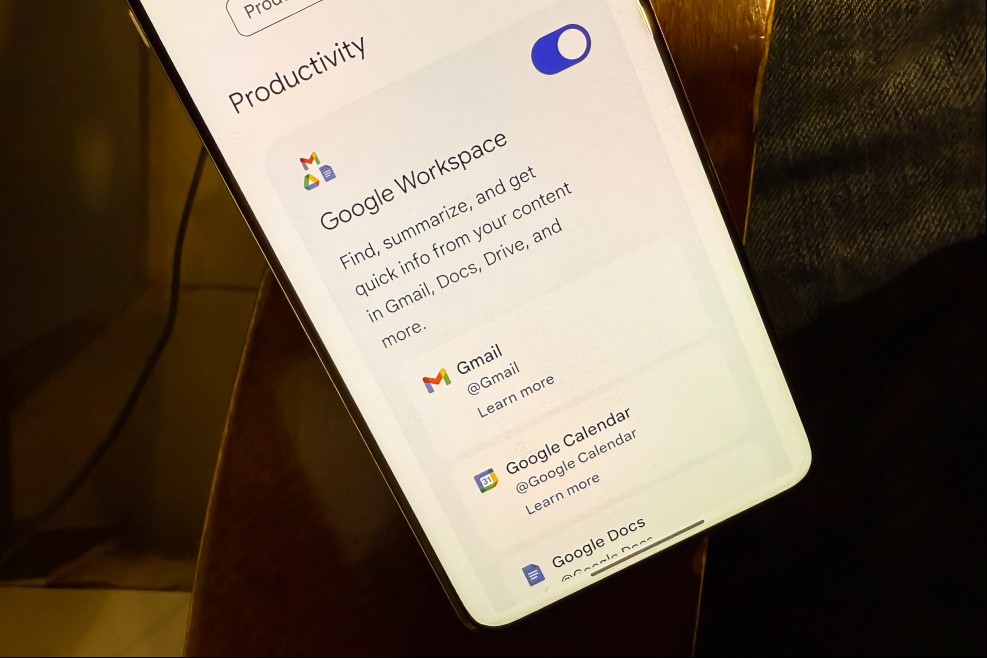

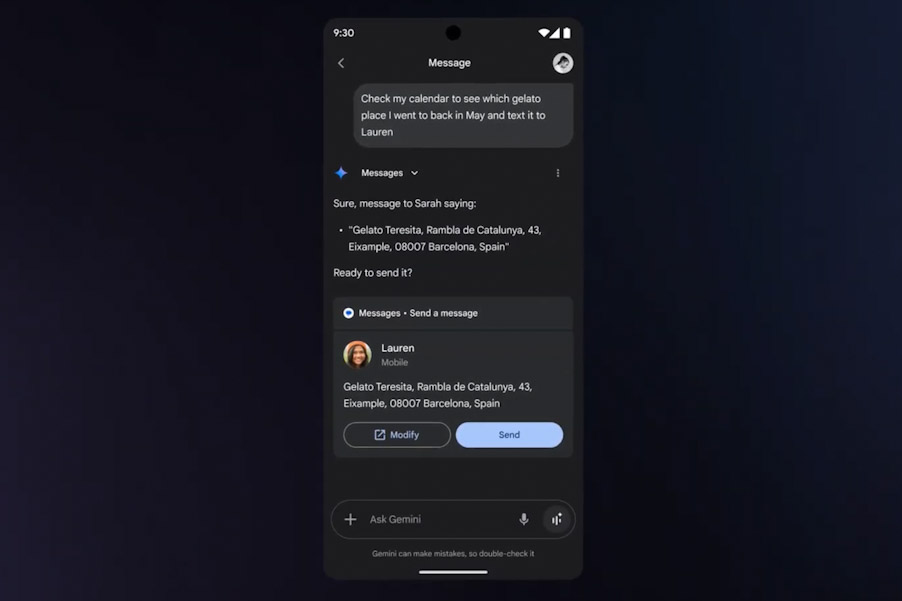

Gemini can interact with other applications, both Google’s own and third-party ones, through an “apps” system previously known as extensions. It’s a neat convenience, as it lets users get work done across different apps without even launching them.

Google is now bringing these apps to the Gemini 2.0 Flash Thinking Experimental model. Moreover, the pool of apps is being expanded to include Google Photos and Notes. Gemini already has access to YouTube, Maps, Google Flights, Google Hotels, Keep, Drive, Docs, Calendar, and Gmail.

Users can also enable the apps system for third-party services such as WhatsApp and Spotify by linking them with their Google account. Beyond pulling information and getting tasks done across different apps, the system also lets users execute multistep workflows.

For example, with a single voice command, users can ask Gemini to look up a recipe on YouTube, add the ingredients to their notes, and find a nearby grocery store. In a few weeks, Google Photos will also be added to the list of apps that Gemini can access.

“With this thinking model, Gemini can better tackle complex requests like prompts that involve multiple apps, because the new model can better reason over the overall request, break it down into distinct steps, and assess its own progress as it goes,” explains Google.

Google is also expanding the context window of the Gemini 2.0 Flash Thinking Experimental model to 1 million tokens. AI tools such as Gemini break text into tokens, and an average English word works out to roughly 1.3 tokens.

The larger the context window, the more input the model can take in at once. With the expanded window, Gemini 2.0 Flash Thinking Experimental can now process much larger chunks of information, which helps it tackle complex problems.
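To put the 1-million-token figure in perspective, the arithmetic can be sketched in a few lines of Python. This is a rough estimate only: it assumes the article’s average of 1.3 tokens per English word, while real token counts depend on the tokenizer, the language and the formatting of the text. The helper names `words_that_fit` and `fits_in_window` are hypothetical and are not part of any Gemini API.

```python
# Back-of-the-envelope estimate of how much English text fits in a context window.
# Assumption: an average of 1.3 tokens per English word (the rough figure quoted
# in the article). Actual tokenizers will give different counts.

TOKENS_PER_WORD = 1.3  # rough average, not an exact constant


def words_that_fit(context_tokens: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Approximate number of English words that fit in `context_tokens` (rounded down)."""
    if context_tokens < 0 or tokens_per_word <= 0:
        raise ValueError("context_tokens must be >= 0 and tokens_per_word > 0")
    return int(context_tokens / tokens_per_word)


def fits_in_window(word_count: int, context_tokens: int,
                   tokens_per_word: float = TOKENS_PER_WORD) -> bool:
    """Rough check of whether a text of `word_count` words fits in the window."""
    return word_count * tokens_per_word <= context_tokens


if __name__ == "__main__":
    window = 1_000_000  # the 1-million-token limit mentioned in the article
    print(f"{words_that_fit(window):,}")      # 769,230
    print(fits_in_window(700_000, window))    # True  (about 910,000 tokens)
    print(fits_in_window(800_000, window))    # False (about 1,040,000 tokens)
```

By this estimate, a 1-million-token window holds roughly 770,000 English words, though the true figure for any given document will differ.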
