Tired of monthly payments? ChatGPT could soon offer a lifetime subscription

ChatGPT usage is more prevalent than ever, and its current model offers a monthly subscription of $20 for ChatGPT Plus or the mind-bogglingly steep $200

Even as OpenAI continues clinging to its assertion that the only path to AGI lies through massive financial and energy expenditures, independent researchers are leveraging open-source technologies to match the performance of its most powerful models — and do so at a fraction of the price.

Last Friday, a joint team from Stanford University and the University of Washington announced that they had trained a math- and coding-focused large language model that performs as well as OpenAI’s o1 and DeepSeek’s R1 reasoning models. It cost just $50 in cloud compute credits to build. The team reportedly used an off-the-shelf base model, then distilled Google’s Gemini 2.0 Flash Thinking Experimental model into it. Distilling an AI model involves extracting the information a larger model uses to complete a specific task and transferring it to a smaller one.
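For readers curious what distillation looks like in practice, here is a minimal sketch of one textbook variant, soft-label distillation, in which a small "student" model is scored on how closely its output distribution matches a larger "teacher" model's. This is a generic illustration, not the Stanford and Washington team's reported method, which is not detailed here. The function names, the example logits, and the temperature of 2.0 are all assumptions made for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Hypothetical helper for illustration: the loss is zero when the student
    reproduces the teacher exactly and grows as the two disagree.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0)


if __name__ == "__main__":
    teacher_scores = [2.0, 1.0, 0.1]  # made-up scores over three candidate answers
    student_scores = [0.1, 1.0, 2.0]  # a student that currently disagrees
    print(distillation_loss(teacher_scores, student_scores))
```

In a real training run, a loss like this would be minimized over many examples so that the student gradually imitates the teacher. Another common approach skips the probabilities entirely and simply fine-tunes the student on text the teacher generated.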

What’s more, on Tuesday, researchers from Hugging Face released Open Deep Research, a competitor to OpenAI’s Deep Research and Google Gemini’s identically named Deep Research tool, which they developed in just 24 hours. “While powerful LLMs are now freely available in open-source, OpenAI didn’t disclose much about the agentic framework underlying Deep Research,” Hugging Face wrote in its announcement post. “So we decided to embark on a 24-hour mission to reproduce their results and open-source the needed framework along the way!” It reportedly costs an estimated $20 in cloud compute credits and takes less than 30 minutes to train.

Hugging Face’s model subsequently notched 55% accuracy on the General AI Assistants (GAIA) benchmark, which is used to test the capabilities of agentic AI systems. By comparison, OpenAI’s Deep Research scored between 67% and 73% accuracy, depending on the response methodology. Granted, the 24-hour model doesn’t perform quite as well as OpenAI’s offering, but it also didn’t take billions of dollars and the energy generation capacity of a mid-sized European nation to train.

These efforts follow news from January that a team at the University of California, Berkeley’s Sky Computing Lab managed to train its Sky-T1 reasoning model for around $450 in cloud compute credits. The team’s Sky-T1-32B-Preview model proved the equal of OpenAI’s early o1-preview reasoning model release. As more of these open-source challengers to OpenAI’s industry dominance emerge, their mere existence calls into question whether the company’s plan of spending half a trillion dollars to build AI data centers and energy production facilities is really the answer.

ChatGPT usage is more prevalent than ever, and its current model offers a monthly subscription of $20 for ChatGPT Plus or the mind-boggling steep $200

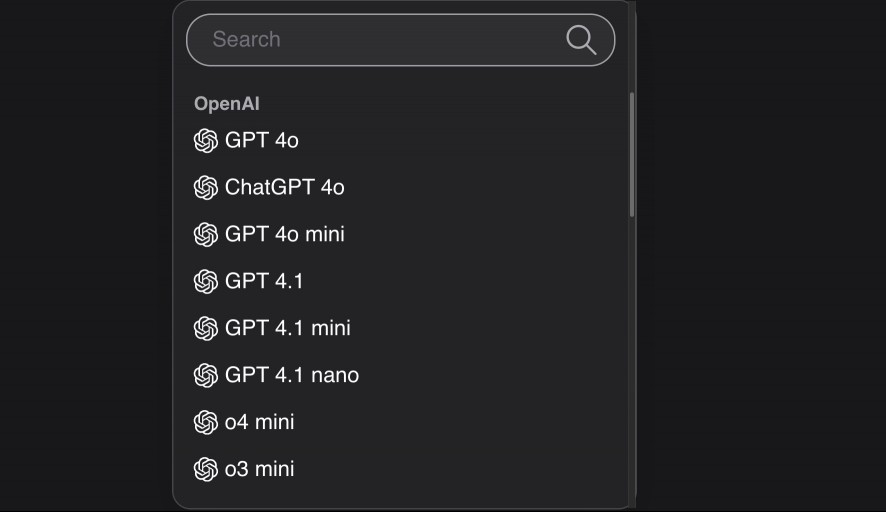

OpenAI has made GPT-4.1 more widely available, as ChatGPT Plus, Pro, and Team users can now access the AI model. On Wednesday, the brand announced tha

If you have a regular subscription, you’re likely well-versed in the dance of paying for something and wondering if it’s worth the value. For many peo

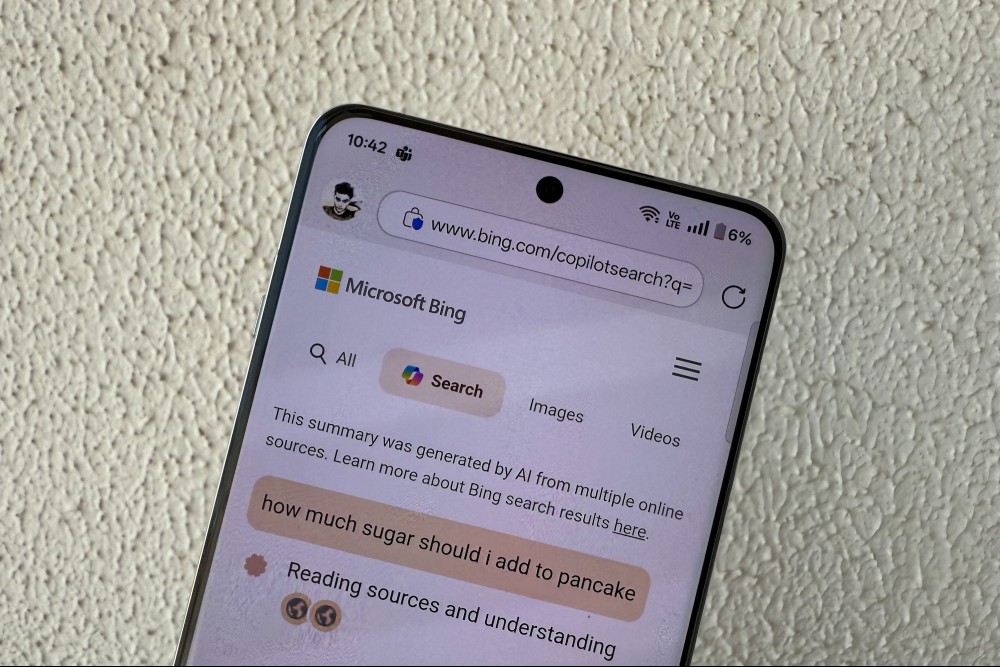

Late last year, Microsoft introduced a new AI feature called Copilot Vision for the web, and now it’s being made available on mobile devices. This fea

It feels like all of the big tech companies practically live in courtrooms lately, but it also feels like not much really comes of it. Decisions get m

At MWC 2025, Google confirmed that its experimental Project Astra assistant will roll out widely in March. It seems the feature has started reaching o

Barely a few weeks ago, Google introduced a new AI Search mode. The idea is to provide answers as a wall of text, just the way an AI chatbot answers y

Meta has found another place to push its eponymous AI, after injecting it as a standalone chat character in the world’s most popular messaging app. Th
